
How to Create the Bank of You: Cognitive Dissonance in the World of Banking – Could We Close the Intention-Action Gap with an AI-Assisted “Motivation Engine”?

April 7, 2025



Cognitive Dissonance: the state of having inconsistent thoughts, beliefs, or attitudes, especially as relating to behavioral decisions and attitude change.

You want to be healthy, but you don't exercise regularly or eat a nutritious diet, because the late-night fridge is too tempting. Most of us feel guilty as a result. You know that smoking or drinking too much is harmful to your health, but you do it anyway. Human actions and inactions are mostly a consequence of subconscious processes (motivation), and as C.G. Jung is often quoted as saying, “until you make the unconscious conscious, it will direct your life, and you will call it fate”.

Why Do We Fail to Do What We Know Is Right for Us?

Now apply this logic to “financial health”. People generally want to have more money saved (security motivation), invest smarter (let money work for us, and not only vice versa), and cut back on unnecessary spending (and boy, there is a lot of that). Yet, behavioural data across the industry shows that despite good intentions, financial actions often paint a very different picture.

In fact, almost half of the people in developed economies have less than 1.000 EUR in emergency savings. Money doesn’t work for them; they work for money. Their intentions are good, but they fail to act. Why?

The answer lies in the psychological chasm between intention and action.

Cognitive Dissonance within Modern Banking

Everybody wants financial security. But what we actually do is often driven by short-term gratification, peer influence, digital nudges, and emotion-driven responses. This isn't irrational at all. It's just human.

Banks sit on vast quantities of consumer data, and some have become very data-driven in how they deploy that data in their commercial strategies. AI can help a lot, and it does. Neobanks readily build credit scoring systems to assess whether they should lend you money, regardless of what your “tax authorities-reported” spending looks like. Incumbents rely on pools of historical client data, because they have access to them. But all of this is optimized for tracking financial behaviour rather than changing it. Banks adapt their business moves to what people do: how they earn and how they spend their money. To an extent they try to help clients allocate their funds more sensibly through personal advice (something neobanks do not even offer), but clients who don’t have much to allocate get little help to do better. Some of us in the industry can be more empathetic than others, but in the end, it’s your life, your money.

Could we use a mix of AI and human-instilled psychology to do more than automate, namely to motivate?

Introducing the AI Motivation Engine

Imagine a system that doesn't just show you your financial data, but understands your psychology, predicts your behavioural patterns, and constantly nudges you gently toward your own goals if you allow it. Not through coercion or gamification, but through personalized, adaptive guidance.

Let’s call this the AI Motivation Engine.

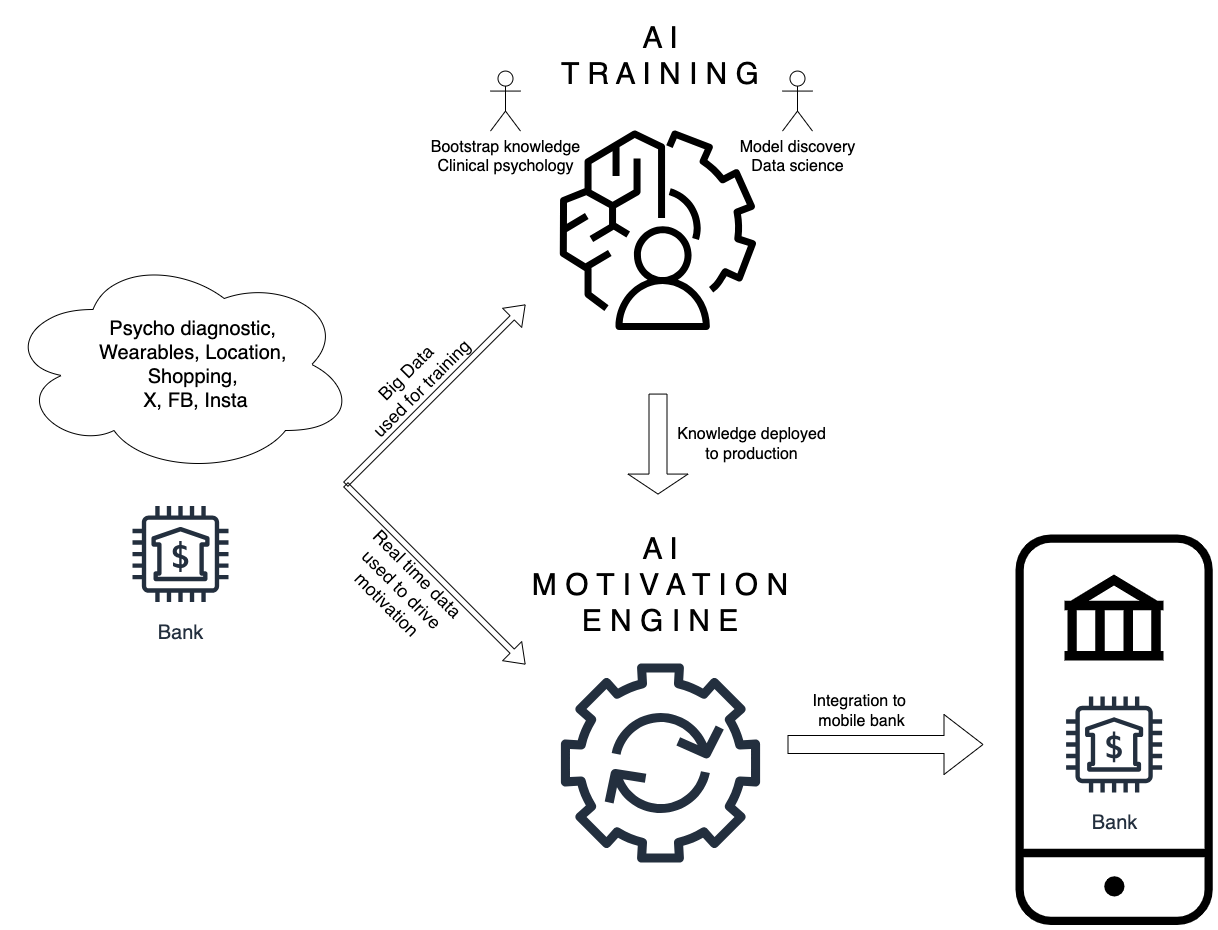

Such an “engine” is a kind of AI software, an architecture built on inputs and expertise from human clinical psychology. It takes in a mix of signals from an individual's context, predicts that individual's likely behaviours, and tries to influence them in line with the individual’s own key goals.

In a nutshell, it takes:

- Behavioural signals: from spending habits to sleep patterns

- Psycho-diagnostic data: based on micro-decisions and behavioural patterns in the digital world, helping to build a macro profile of you over time

- Contextual inputs: location, mood, wearable data

It feeds all of these into a banking app environment as inputs, transforms them into real-time, hyper-personalized questions, and serves them to you as outputs via your chosen (digital) channel:

- "You said you wanted to save 500 EUR/month. Based on your spending so far, shall we move 100 EUR today to a short-term deposit?"

- "Your shopping expenses are going up, and you said you are thinking of buying a new apartment. To afford a mortgage instalment of 1.500 EUR/month, which would let you buy an apartment worth 350.000 EUR, your credit card debt should be lower. Want to temporarily freeze your card after midnight for this week?"

- "You’re spending less this week than usual. Great progress! Shall we boost your savings goal by 5% this month?"
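To make the input-to-question flow above more concrete, here is a minimal Python sketch of how such a rule might look. It is an illustration only, not a description of any real engine: the `ClientState` fields, the `next_nudge` function, and the 100 EUR transfer step are all hypothetical names and values chosen to mirror the example questions, and a real system would replace these hand-written rules with the psychological and predictive models described in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClientState:
    """Hypothetical snapshot of one client; every field is an illustrative assumption."""
    opted_in: bool               # the client has allowed nudges
    monthly_savings_goal: float  # EUR, as stated by the client
    saved_this_month: float      # EUR already moved to savings
    spent_this_week: float       # EUR spent so far this week
    usual_weekly_spend: float    # EUR, e.g. a trailing average


def next_nudge(state: ClientState, transfer_step: float = 100.0) -> Optional[str]:
    """Return one question for the client, or None if there is nothing to ask."""
    # Nudges are only produced for clients who allowed them.
    if not state.opted_in:
        return None

    remaining = state.monthly_savings_goal - state.saved_this_month

    # Goal not yet reached: propose a small, concrete transfer.
    if remaining > 0:
        amount = min(transfer_step, remaining)
        return (
            f"You said you wanted to save {state.monthly_savings_goal:.0f} EUR/month. "
            f"Shall we move {amount:.0f} EUR today to a short-term deposit?"
        )

    # Goal reached and spending below the usual level: offer a stretch goal.
    if state.spent_this_week < state.usual_weekly_spend:
        return (
            "You're spending less this week than usual. "
            "Shall we boost your savings goal by 5% this month?"
        )

    return None


if __name__ == "__main__":
    client = ClientState(True, 500.0, 200.0, 300.0, 250.0)
    print(next_nudge(client))
```

Note that every output is phrased as a question the client can decline, which keeps the decision with the client rather than with the bank.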

This isn’t sci-fi. It’s data science plus clinical psychology, deployed responsibly. It is basically a liaison and recommendation system, reminding you of your own goals and keeping you motivated to achieve them. The diagram above shows it graphically.

The Motivation Engine is not an “it works” or “it does not work” kind of system. It aims to deliver gradual overall improvements in a person’s behaviour by bringing the unconscious into the open and making it visible to that person to the extent possible. It uses not only a bank’s classical data feeds but is also augmented with other human context: psychology, wearable data, and similar feeds. When applied to a large enough client base, the resulting shifts in financial behaviour can have large positive consequences for both the bank and its clients.

Is There a Way of Turning Digital Banks into Cognitive Coaches?

I think the future of banking isn’t just a slick UI or lower rates for clients. It’s about becoming a partner in self-actualization. Too ambitious a plan for a bank?

Digital platforms in general could have the ability to:

- Identify moments of micro-friction between long-term intent and current action

- Close the loop with feedback that feels natural, human, and non-invasive

- Encourage small wins that add up to major life shifts

This is especially important for younger generations, who don’t care much about banks and are more attuned to platforms that seem to understand them.

From Big Brother Surveillance to Empowerment: The Ethics of Such Motivational Nudges

Let’s be clear: not all nudges are created equal. The same tools that can guide someone to better financial health can also be used to exploit, manipulate, or distract.

That’s why the AI Motivation Engine will be built on:

- Transparency: users should know how and why they’re being nudged

- Consent: personalization should be opt-in and adjustable

- Alignment: nudges must serve the user’s own clearly stated goals, not just the bank’s KPIs
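One way to read these three principles is as a gate that every nudge must pass before it is shown. The Python sketch below is a hedged illustration under that assumption: `Nudge`, `ClientPreferences`, and `may_send` are hypothetical names invented here, and a real deployment would need far richer consent and explanation handling.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Nudge:
    """Hypothetical nudge record; all fields are illustrative assumptions."""
    message: str
    serves_goal: Optional[str]  # the client goal this nudge supports, if any
    reason: str                 # plain-language explanation shown to the client


@dataclass
class ClientPreferences:
    opted_in: bool = False
    stated_goals: Set[str] = field(default_factory=set)


def may_send(nudge: Nudge, prefs: ClientPreferences) -> bool:
    """Allow a nudge only if it passes all three checks."""
    # Consent: personalization is opt-in.
    if not prefs.opted_in:
        return False
    # Alignment: the nudge must support a goal the client actually stated.
    if nudge.serves_goal is None or nudge.serves_goal not in prefs.stated_goals:
        return False
    # Transparency: the client must be told why they are being nudged.
    return bool(nudge.reason.strip())


if __name__ == "__main__":
    prefs = ClientPreferences(opted_in=True, stated_goals={"save_500_per_month"})
    nudge = Nudge(
        message="Shall we move 100 EUR today to a short-term deposit?",
        serves_goal="save_500_per_month",
        reason="You asked us to help you save 500 EUR/month.",
    )
    print(may_send(nudge, prefs))
```

A nudge that only serves a bank KPI would carry no matching client goal and would be rejected by the alignment check.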

This is the difference between a bank acting as a coach and a platform acting as a manipulator.

Is This an Actual Opportunity for Banks?

Self-aware, forward-looking bank executives are being “pushed” into constant transition by the evolution of technology. But ultimately, they all want a happy and loyal clientele and, in an ideal scenario, an indispensable background role in helping clients achieve their “financial freedom”. If that role gives them the opportunity to offer clients real-time behavioural feedback loops, deepening the emotional connection with them, the Motivation Engine idea should resonate well.

Final Word: “The Bank of You”

The next great bank won’t just store your money, or lend you money so that you can enjoy its benefits now, while you live “at peak”, rather than when you are old and have finally saved enough but have not lived.

Instead, it should understand your aspirations, your fears, your patterns.

It should coach you through your habits, celebrate your wins, and quietly help you become the person your best self is already aiming to be. And while doing that, it should stand in the background, as a permanently friendly utility ecosystem.

And it could do this not with generic advice, but with machine-assisted, yet humanly interpreted empathy.

If this resonates, let’s talk.